Large convolutional nets for object classification are pretty awesome at classifying objects. Since Google's Deep Dream discovery, they’re awesome at other stuff as well. The input to a classification net is an image and the output is a classification, but in between there are layers that transform the image into that classification. What the Deep Dream project did was use those intermediate layers as targets for the image, then tweak the image in a way that enhances the features of a particular layer. Typically, layers close to the image represent discovered shapes and textures, while layers closer to the classification represent higher abstractions closer to the classes, like dogs and cars. Lots of fun can be had when selecting how these intermediate layers should be enhanced. Here are a couple of ways to use intermediate layers as targets, and lots more has enabled things like Neural style and Neural doodle.
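To make the "tweak the image to enhance a layer" idea concrete, here is a minimal NumPy sketch. Everything in it is an assumption for illustration: the real net is replaced by a single random linear layer with a ReLU (`W`, `layer`), the "image" is a flat vector, and `objective`, `dream` and the step size are made-up names and values rather than Deep Dream's actual code.

```python
import numpy as np

def layer(x, W):
    """Toy 'intermediate layer': a linear map followed by ReLU."""
    return np.maximum(W @ x, 0.0)

def objective(x, W):
    """How strongly the layer responds: half the sum of squared activations."""
    a = layer(x, W)
    return 0.5 * np.sum(a ** 2)

def objective_grad(x, W):
    """Gradient of the objective with respect to the input x.

    The ReLU output is already zero wherever the unit is inactive,
    so back-propagating the activations through W gives the gradient.
    """
    return W.T @ layer(x, W)

def dream(x, W, steps=20, step_size=0.1):
    """Gradient ascent on the image; the layer weights stay fixed."""
    x = x.copy()
    for _ in range(steps):
        g = objective_grad(x, W)
        # Normalise the step so its size does not depend on the gradient scale.
        x += step_size * g / (np.abs(g).mean() + 1e-8)
    return x

rng = np.random.default_rng(0)
W = rng.normal(size=(32, 64))      # hypothetical layer weights
image = rng.normal(size=64)        # hypothetical flattened image
dreamed = dream(image, W)

print("layer response before:", objective(image, W))
print("layer response after: ", objective(dreamed, W))
```

The point is only that the weights are frozen and the image is the thing being updated. Picking a different layer, or a different objective on its activations, changes what gets enhanced.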

However, tweaking images using gradient descent is quite slow. Neural style images are typically generated by evaluating the image being transformed on the neural network 500-2000 times. That can take minutes even on GPUs.
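A rough sketch of why this is slow: every update of the image costs one full evaluation of the loss and its gradient. The code below is a toy under stated assumptions, not the Neural style implementation. The feature extractor is a random linear map `W`, images are small `(channels, pixels)` arrays, the style target is a Gram matrix of the features, and the accept/shrink step rule is a simple stand-in for whatever optimiser a real implementation uses.

```python
import numpy as np

def gram(F):
    """Channel-by-channel feature correlations, averaged over pixels."""
    return F @ F.T / F.shape[1]

def loss_and_grad(X, W, F_content, G_style):
    """Content + style loss for image X, and its gradient with respect to X."""
    F = W @ X                                  # toy linear "feature extractor"
    dC = F - F_content                         # content term: feature difference
    dG = gram(F) - G_style                     # style term: Gram matrix difference
    loss = np.mean(dC ** 2) + np.sum(dG ** 2)
    dF = 2.0 * dC / F.size + 4.0 * dG @ F / F.shape[1]
    return loss, W.T @ dF

def stylise(content, style, W, steps=100):
    """Iterate directly on the image, counting network evaluations."""
    F_content, G_style = W @ content, gram(W @ style)
    X = content.copy()
    loss, grad = loss_and_grad(X, W, F_content, G_style)
    history, evaluations, step = [loss], 1, 1.0
    for _ in range(steps):
        candidate = X - step * grad
        new_loss, new_grad = loss_and_grad(candidate, W, F_content, G_style)
        evaluations += 1
        if new_loss < loss:      # accept the step, try a slightly larger one next
            X, loss, grad = candidate, new_loss, new_grad
            step *= 1.2
        else:                    # overshoot: keep the image, shrink the step
            step *= 0.5
        history.append(loss)
    return X, history, evaluations

rng = np.random.default_rng(0)
W = rng.normal(size=(6, 3))                 # hypothetical feature weights
content = rng.normal(size=(3, 64))          # 3 channels, 64 pixels
style = 2.0 * rng.normal(size=(3, 64))
result, history, evaluations = stylise(content, style, W)

print("evaluations:", evaluations)
print("loss: %.4f -> %.4f" % (history[0], history[-1]))
```

Here each evaluation is a couple of tiny matrix products. With a deep convolutional net and a full-size image, the same loop repeated hundreds or thousands of times is where the minutes go.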

Johnson et al. came up with the idea of training a separate network to do such an image transformation in a single pass. By training a transformation network with these cool target functions, one can get results similar to tweaking the image directly, but in a single pass through an already trained transformation network. That makes it possible to do such transformation tasks on video in real time. Pretty cool!
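The shift from "optimise the image" to "optimise a network that transforms images" can be sketched in a few lines. This is a toy under loud assumptions, not the architecture from Johnson et al.: the "transformation network" is a single 3x3 colour-mixing matrix `T` (think of one 1x1 convolution), the loss network is a random linear map `W`, and the training data is random noise. All names here are hypothetical.

```python
import numpy as np

def gram(F):
    """Channel-by-channel feature correlations, averaged over pixels."""
    return F @ F.T / F.shape[1]

def batch_loss_and_grad(T, images, W, G_style):
    """Mean content + style loss of T applied to each image, and d(loss)/dT."""
    total, grad = 0.0, np.zeros_like(T)
    for X in images:
        F = W @ (T @ X)              # features of the transformed image
        dC = F - W @ X               # content term: stay close to the input's features
        dG = gram(F) - G_style       # style term: match the style Gram matrix
        total += np.mean(dC ** 2) + np.sum(dG ** 2)
        dF = 2.0 * dC / F.size + 4.0 * dG @ F / F.shape[1]
        grad += (W.T @ dF) @ X.T     # chain rule back through W, then through T @ X
    return total / len(images), grad / len(images)

def train(images, style, W, steps=200):
    """Slow part, done once: fit the transform T for one fixed style."""
    T = np.eye(images[0].shape[0])   # start from the identity transform
    G_style = gram(W @ style)
    loss, grad = batch_loss_and_grad(T, images, W, G_style)
    history, step = [loss], 1.0
    for _ in range(steps):
        candidate = T - step * grad
        new_loss, new_grad = batch_loss_and_grad(candidate, images, W, G_style)
        if new_loss < loss:          # accept the step, grow it a little
            T, loss, grad = candidate, new_loss, new_grad
            step *= 1.2
        else:                        # overshoot: shrink the step
            step *= 0.5
        history.append(loss)
    return T, history

rng = np.random.default_rng(0)
W = rng.normal(size=(6, 3))                      # hypothetical loss-network weights
style = 2.0 * rng.normal(size=(3, 64))
train_images = [rng.normal(size=(3, 64)) for _ in range(8)]
T, history = train(train_images, style, W)

# Fast part, done per image: a single pass, with no loss network and no iteration.
new_image = rng.normal(size=(3, 64))
stylised = T @ new_image
print("training loss: %.4f -> %.4f" % (history[0], history[-1]))
```

The loss is the same kind of target function as before, but the gradient now flows into the parameters of the transform instead of into the pixels. Once training is done, stylising a new frame is one forward pass, which is what makes real-time video plausible.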

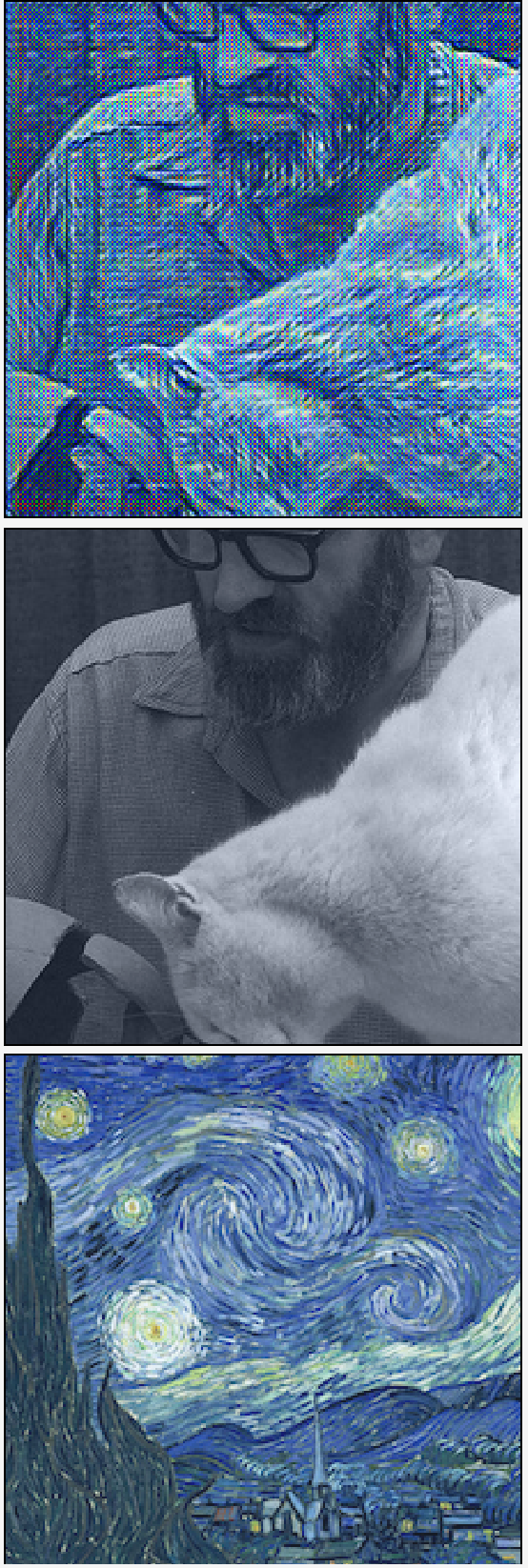

I’ve implemented the model described in Johnson et al. here using TensorFlow.

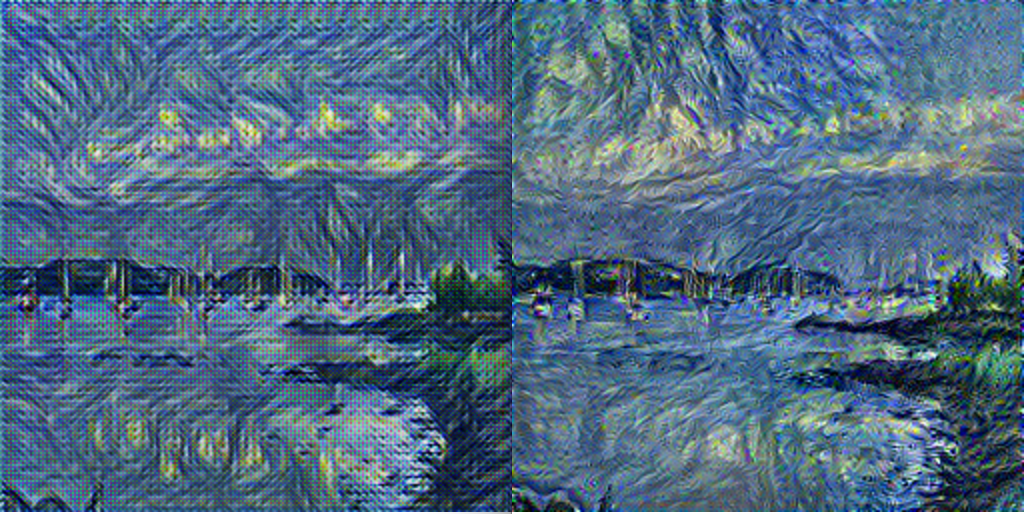

It works pretty well, but there is some noise in the generated images. Here you can see two images side by side: one is generated by iterating directly on the content image and style image, while the other is generated by passing a content image through a transformation network already trained to optimise the style transformation. Code for both is on GitHub.